Optimizing Costs: Introducing a Container Image Cache in a Hybrid CI/CD Environment

A hybrid infrastructure offers both advantages and disadvantages. On the one hand, it allows you to run mission-critical workloads at a cloud provider to benefit from high availability and flexibility, while moving steady, resource-intensive workloads on-premise to save costs. Consider a typical hybrid deployment scenario:

- A self-hosted GitLab instance, including its built-in container registry, is deployed in the cloud

- All GitLab CI/CD runners operate on-premise

However, this approach presents a challenge. In every pipeline job that uses a container image, the on-premise runner must first download the image from the GitLab registry. Typically, these images are stored in object storage such as AWS S3, Azure Blob Storage, or Google Cloud Storage. In this example, we use AWS S3, where egress traffic to the Internet costs around $0.09 per GB. Although this may seem inexpensive at first, it accumulates over time. Consider this scenario:

- Project A uses a 5 GB base image

- The pipeline runs 20 times per day

- Each runner downloads the image from S3 without any caching

Under these conditions, the daily cost of Internet data transfer alone for a single project would be about $9 (5 GB × $0.09/GB × 20 runs). This quickly raises the question of whether an image caching strategy can lower these costs.
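The arithmetic above can be captured in a small helper, which makes it easy to plug in your own image sizes and run counts. This is a back-of-the-envelope sketch that assumes a flat per-GB price; real S3 egress pricing is tiered and region-dependent, and the function name is purely illustrative.

```python
# Back-of-the-envelope egress cost model for the scenario above.
# Assumption: a flat price per GB. Real S3 egress pricing is tiered and
# varies by region, so treat the result as an estimate only.


def egress_cost(image_size_gb: float, downloads: int, price_per_gb: float) -> float:
    """Return the Internet egress cost of downloading an image `downloads` times."""
    return image_size_gb * downloads * price_per_gb


if __name__ == "__main__":
    per_day = egress_cost(image_size_gb=5, downloads=20, price_per_gb=0.09)
    print(f"per day: ${per_day:.2f}, per 30 days: ${per_day * 30:.2f}")
    # prints "per day: $9.00, per 30 days: $270.00"
```

With a cache in place, only cache misses reach the cloud registry. If, hypothetically, the base image changed once per day, `downloads` would drop from 20 to 1 for the cloud-side bill.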

Challenges for Image Caching

Many products, such as the Harbor container registry, offer caching for container images. However, these solutions can introduce complexity, particularly when caching existing registries like the GitLab container registry or Docker Hub.

There are two major downsides:

- Manual Path Changes: Installing Harbor on-premise to cache images from GitLab or Docker Hub requires altering the host address in all referenced images, for instance, changing registry.gitlab.cloud-chronicles.com to harbor.cloud-chronicles.com/gitlab-mirror/.

- Address Discrepancies: This alteration leads to a divergence between push and pull addresses, adding complexity, since images still need to be pushed to the original GitLab registry.

A much better alternative would be a transparent cache configured directly on the Docker host. This setup would allow the host to redirect requests for certain registries through a designated registry proxy, eliminating the need for manual path changes.

The Docker daemon already has this functionality built in, via the following setting in the /etc/docker/daemon.json file:

{
  "registry-mirrors": ["http://on-premise-cache"]
}

However, this feature has a significant limitation: the Docker daemon uses this mirror exclusively for requests to Docker Hub. For requests to other hosts, such as our GitLab registry, the setting is silently ignored. A GitHub issue from 2015 addresses this behaviour, but so far no proper implementation is in sight.
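To make the limitation concrete, the sketch below approximates how an image reference is mapped to a registry host, and therefore whether a registry-mirrors entry could apply at all. It assumes the common Docker convention that the first path component is treated as a registry host only if it contains a "." or ":" or equals "localhost"; everything else resolves to Docker Hub. This is a simplified model with hypothetical function names, not Docker's actual reference parser.

```python
# Simplified model of Docker image-reference resolution (not Docker's real parser).
# Assumption: the first path component is a registry host only if it contains
# "." or ":" or is "localhost"; otherwise the image comes from Docker Hub.

DOCKER_HUB = "docker.io"


def registry_host(image: str) -> str:
    """Return the registry host an image reference points to."""
    first, sep, _rest = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return DOCKER_HUB


def uses_registry_mirror(image: str) -> bool:
    """The daemon.json 'registry-mirrors' setting only applies to Docker Hub pulls."""
    return registry_host(image) == DOCKER_HUB


if __name__ == "__main__":
    for ref in [
        "python:3.12",
        "library/nginx",
        "registry.gitlab.cloud-chronicles.com/group/app:1.0",
    ]:
        print(ref, "->", registry_host(ref), "| mirror used:", uses_registry_mirror(ref))
```

Every image pulled from the GitLab registry falls into the last category, which is why the built-in mirror setting does not help in this scenario.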

Implementing a Man-in-the-Middle Proxy

The best workaround for this missing feature is a man-in-the-middle proxy that intercepts requests to the GitLab registry and caches the data. There is a fantastic project on GitHub called docker-registry-proxy that simplifies the configuration of an nginx server by providing a prebuilt Docker image and some configuration examples. To configure the proxy for a GitLab registry, you can simply start it with the following docker-compose.yml file:

services:
  proxy:
    container_name: registry-proxy
    image: rpardini/docker-registry-proxy:0.6.2
    ports:
      - "3128:3128"
    restart: unless-stopped
    environment:
      - ENABLE_MANIFEST_CACHE=true
      - ALLOW_PUSH=true
      - REGISTRIES=registry.gitlab.cloud-chronicles.com
      - AUTH_REGISTRIES=gitlab.cloud-chronicles.com:GITLAB_USERNAME:GITLAB_PASSWORD
    volumes:
      - proxy-cache:/docker_mirror_cache
      - proxy-ca:/ca

volumes:
  proxy-cache:
  proxy-ca:

A step-by-step guide is also available on how to configure the Docker daemon to use the proxy by setting the HTTP_PROXY and HTTPS_PROXY environment variables. Each Docker host must also trust the proxy's self-issued CA certificate to avoid certificate errors. Once the Docker daemon is restarted, it routes all of its traffic through the proxy server, which stores image layers for future use. The proxy even allows setting a maximum cache size.
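On hosts where dockerd is managed by systemd, a common way to set these variables is a drop-in file such as /etc/systemd/system/docker.service.d/http-proxy.conf. The sketch below only renders that file's contents; the proxy address is a hypothetical placeholder, and writing the file, installing the CA certificate, reloading systemd, and restarting Docker are left to your provisioning tooling.

```python
# Render a systemd drop-in that points dockerd at the caching proxy.
# Assumptions: dockerd runs under systemd, and the proxy URL passed in
# (e.g. "http://cache.example.internal:3128") is a hypothetical address.
from typing import Optional, Sequence


def render_proxy_dropin(proxy_url: str, no_proxy: Optional[Sequence[str]] = None) -> str:
    """Return the contents of docker.service.d/http-proxy.conf."""
    lines = [
        "[Service]",
        f'Environment="HTTP_PROXY={proxy_url}"',
        f'Environment="HTTPS_PROXY={proxy_url}"',
    ]
    if no_proxy:
        # Hosts listed in NO_PROXY bypass the proxy entirely.
        hosts = ",".join(no_proxy)
        lines.append(f'Environment="NO_PROXY={hosts}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_proxy_dropin("http://cache.example.internal:3128"), end="")
```

Generating the file from a single function keeps the proxy address consistent across all runner hosts, which matters once more than a handful of machines point at the same cache.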

With this setup, the pipeline code can remain completely unchanged, and developers will likely notice no difference in their workflow. Whenever a runner requests a cached image, the download will probably be much faster, and the local Internet connection will not be clogged up.

Experiences and Caveats

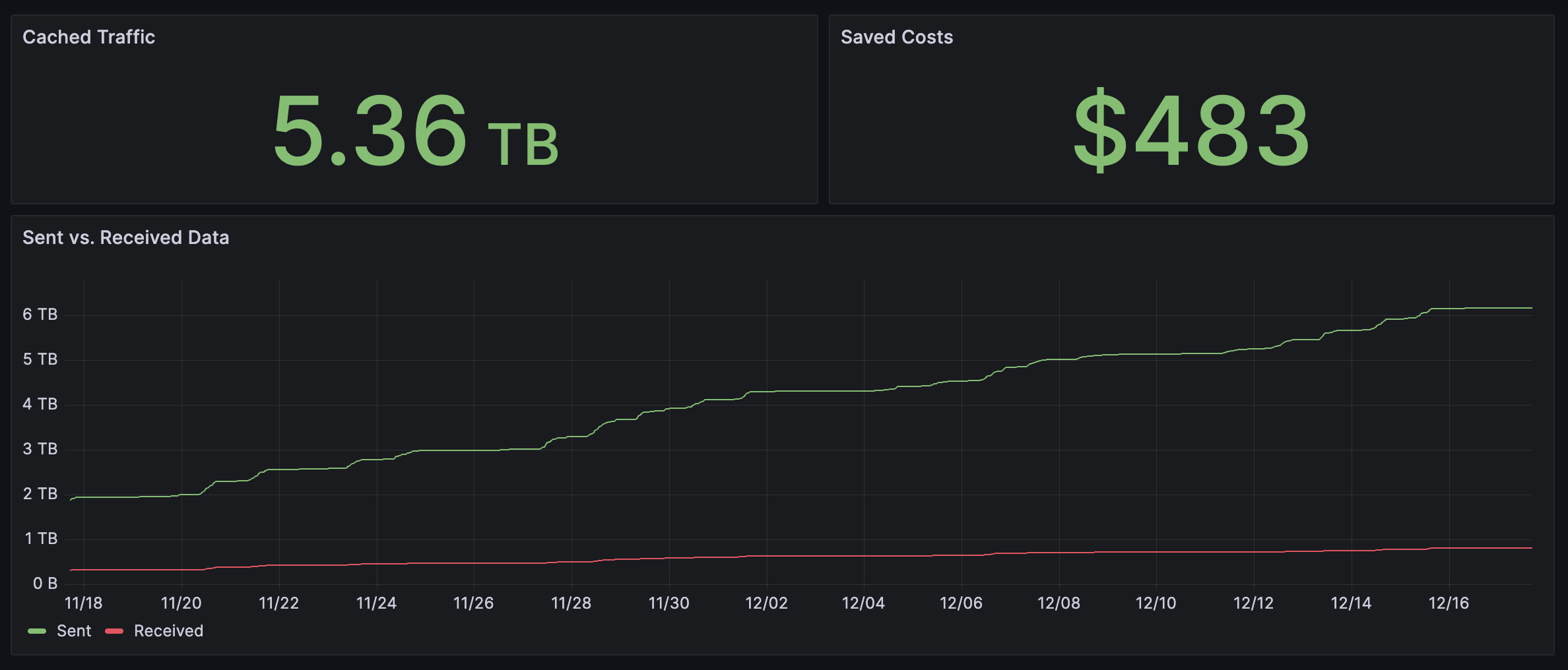

In my experience, the setup with docker-registry-proxy works flawlessly, even over long periods of time. For example, you can use the Prometheus node-exporter to build Grafana dashboards that show the cached traffic, and thus the saved costs, by simply subtracting the caching server's incoming traffic from its outgoing traffic.
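The subtraction described above can be turned into a rough dollar figure. This sketch assumes the byte deltas come from node-exporter's node_network_transmit_bytes_total and node_network_receive_bytes_total counters for the cache's network interface over the same period, that the interface carries almost exclusively registry traffic, and that the flat $0.09/GB price from earlier applies. The function name and the decimal-gigabyte conversion are illustrative assumptions.

```python
# Estimate savings from the caching server's network counters.
# Assumptions: tx/rx deltas cover the same time window on the cache's interface,
# that interface carries (almost) only registry traffic, and egress is priced
# at a flat rate per decimal gigabyte.

GB = 10**9  # assumption: decimal gigabytes


def saved_egress_cost(tx_bytes: int, rx_bytes: int, price_per_gb: float = 0.09) -> float:
    """Price the bytes served to runners (tx) that were not fetched upstream (rx)."""
    served_from_cache = max(tx_bytes - rx_bytes, 0)
    return served_from_cache / GB * price_per_gb


if __name__ == "__main__":
    # Hypothetical month: 100 GB served to runners, 10 GB fetched from the cloud.
    print(f"saved: ${saved_egress_cost(100 * GB, 10 * GB):.2f}")
```

Clamping at zero guards against windows in which the cache fetched more than it served, for example right after the cache was emptied.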

However, there are two major caveats that should be considered carefully:

- The cache authenticates against the GitLab registry.

  - The authentication token used by the cache needs access to all projects required by the runners.

  - Each job executed on the runners therefore inherits the same access privileges to private projects as the token used by the cache.

- The cache may return outdated images when caching of image manifests is enabled.

  - This especially applies when a project uses the :latest tag, which is generally best avoided.

  - The cache invalidation time for different tag patterns can be adjusted to mitigate this issue.
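The idea behind pattern-based invalidation can be sketched as an ordered list of (tag pattern, lifetime) rules, where the first matching rule decides how long a cached manifest is trusted before it is revalidated upstream. The rules and durations below are hypothetical examples, not docker-registry-proxy's defaults; the proxy exposes its own settings for this, documented in its README.

```python
import re
from datetime import timedelta

# Hypothetical rules: (tag pattern, how long a cached manifest is trusted).
# Mutable tags get short lifetimes; version-like tags are treated as immutable.
RULES = [
    (re.compile(r"latest|main|stable|nightly"), timedelta(minutes=10)),
    (re.compile(r"v?\d+\.\d+\.\d+"), timedelta(days=60)),
]
DEFAULT_TTL = timedelta(hours=1)


def manifest_ttl(tag: str) -> timedelta:
    """Return the cache lifetime for a tag's manifest; the first full match wins."""
    for pattern, ttl in RULES:
        if pattern.fullmatch(tag):
            return ttl
    return DEFAULT_TTL


def is_stale(tag: str, cached_age: timedelta) -> bool:
    """A cached manifest must be revalidated once it is older than its lifetime."""
    return cached_age >= manifest_ttl(tag)
```

Keeping lifetimes short for mutable tags such as :latest limits how long a runner can receive an outdated image, while long lifetimes for pinned versions preserve most of the cache's benefit.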